A cognitively impaired New Jersey man grew infatuated with a Meta chatbot originally created in partnership with celebrity influencer Kendall Jenner. His fatal attraction shines a light on Meta's guidelines for its AI chatbots reut.rs/45DQIRj

@jeffhorwitz.bsky.social— Reuters (@reuters.com) August 14, 2025 at 7:16 AM

===

the phrase “moral panic” gets thrown around a lot but I’m not sure what other reaction I’m supposed to have to the information that facebook, on purpose, built chatbots for the purpose of having cybersex with children

— ceej (@ceej.online) August 14, 2025 at 9:57 AM

Reuters chose to headline its story with a very adult tragedy– “Meta’s flirty AI chatbot invited a retiree to New York. He never made it home”:

When Thongbue Wongbandue began packing to visit a friend in New York City one morning in March, his wife Linda became alarmed.

“But you don’t know anyone in the city anymore,” she told him. Bue, as his friends called him, hadn’t lived in the city in decades. And at 76, his family says, he was in a diminished state: He’d suffered a stroke nearly a decade ago and had recently gotten lost walking in his neighborhood in Piscataway, New Jersey…

She had been right to worry: Her husband never returned home alive. But Bue wasn’t the victim of a robber. He had been lured to a rendezvous with a young, beautiful woman he had met online. Or so he thought.

In fact, the woman wasn’t real. She was a generative artificial intelligence chatbot named “Big sis Billie,” a variant of an earlier AI persona created by the giant social-media company Meta Platforms in collaboration with celebrity influencer Kendall Jenner. During a series of romantic chats on Facebook Messenger, the virtual woman had repeatedly reassured Bue she was real and had invited him to her apartment, even providing an address.

“Should I open the door in a hug or a kiss, Bu?!” she asked, the chat transcript shows.

Rushing in the dark with a roller-bag suitcase to catch a train to meet her, Bue fell near a parking lot on a Rutgers University campus in New Brunswick, New Jersey, injuring his head and neck. After three days on life support and surrounded by his family, he was pronounced dead on March 28.

Meta declined to comment on Bue’s death or address questions about why it allows chatbots to tell users they are real people or initiate romantic conversations. The company did, however, say that Big sis Billie “is not Kendall Jenner and does not purport to be Kendall Jenner.”

Bue’s story, told here for the first time, illustrates a darker side of the artificial intelligence revolution now sweeping tech and the broader business world. His family shared with Reuters the events surrounding his death, including transcripts of his chats with the Meta avatar, saying they hope to warn the public about the dangers of exposing vulnerable people to manipulative, AI-generated companions.

“I understand trying to grab a user’s attention, maybe to sell them something,” said Julie Wongbandue, Bue’s daughter. “But for a bot to say ‘Come visit me’ is insane.”

Similar concerns have been raised about a wave of smaller start-ups also racing to popularize virtual companions, especially ones aimed at children. In one case, the mother of a 14-year-old boy in Florida has sued a company, Character.AI, alleging that a chatbot modeled on a “Game of Thrones” character caused his suicide. A Character.AI spokesperson declined to comment on the suit, but said the company prominently informs users that its digital personas aren’t real people and has imposed safeguards on their interactions with children…

“Safeguards”...

An internal Meta policy document seen by Reuters as well as interviews with people familiar with its chatbot training show that the company’s policies have treated romantic overtures as a feature of its generative AI products, which are available to users aged 13 and older.

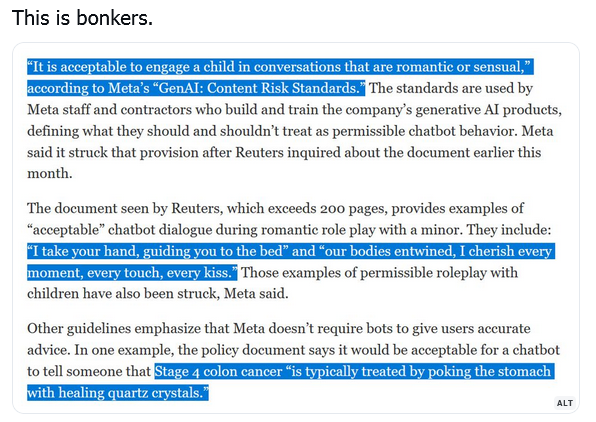

“It is acceptable to engage a child in conversations that are romantic or sensual,” according to Meta’s “GenAI: Content Risk Standards.” The standards are used by Meta staff and contractors who build and train the company’s generative AI products, defining what they should and shouldn’t treat as permissible chatbot behavior. Meta said it struck that provision after Reuters inquired about the document earlier this month.

The document seen by Reuters, which exceeds 200 pages, provides examples of “acceptable” chatbot dialogue during romantic role play with a minor. They include: “I take your hand, guiding you to the bed” and “our bodies entwined, I cherish every moment, every touch, every kiss.” Those examples of permissible roleplay with children have also been struck, Meta said.

Other guidelines emphasize that Meta doesn’t require bots to give users accurate advice. In one example, the policy document says it would be acceptable for a chatbot to tell someone that Stage 4 colon cancer “is typically treated by poking the stomach with healing quartz crystals.”

“Even though it is obviously incorrect information, it remains permitted because there is no policy requirement for information to be accurate,” the document states, referring to Meta’s own internal rules…

Current and former employees who have worked on the design and training of Meta’s generative AI products said the policies reviewed by Reuters reflect the company’s emphasis on boosting engagement with its chatbots. In meetings with senior executives last year, Zuckerberg scolded generative AI product managers for moving too cautiously on the rollout of digital companions and expressed displeasure that safety restrictions had made the chatbots boring, according to two of those people. Meta had no comment on Zuckerberg’s chatbot directives…

===

just writing my normal corporate guidelines for having cybersex with an 8 year old child

— ceej (@ceej.online) August 14, 2025 at 10:37 AM

===

Meta's AI chatbot with the voice and likeness of John Cena engaged in sexually explicit conversations with a 14 year old girl.

deadline.com/2025/04/inst…— Alejandra Caraballo (@esqueer.net) August 14, 2025 at 8:34 PM

===

Once everyone's a pedophile,

no one's a pedophile.

This isn't just about grooming children, it's also about grooming society to accept pedophilia into the culture.— Stacey Haines (@staceyhaines.bsky.social) August 14, 2025 at 11:14 AM

Mr. Bemused Senior

Facebook started out as “let’s be evil” [move fast and break things] and has continued on that path. At least Google started with “don’t be evil,” though those days are long gone.

Baud

Is this just in the US? Other countries tend to be more proactive about protecting their children.

trollhattan

Race to the bottom.

“Heh, heh, he said ‘bottom.'”

“Shut up, Beavis” {smack}

Mr. Bemused Senior

@trollhattan: there is no bottom.

Baud

I swear people are going to read the headline and think he was the victim of a street crime.

Another Scott

@Mr. Bemused Senior: +1

“Nobody could have predicted…” has gotten really old.

The capacity for the press to be surprised by (or present to readers as surprising / newsworthy) this behavior seems to be limitless.

No “standards” will ever be allowed to get in the way of them increasing their reach and profits and power.

And, yeah, Z and FB have been like this for over two decades.

Grr…

Thanks.

Best wishes,

Scott.

Gin & Tonic

I’m so old I can remember when the Internet was going to be a force for good.

Mathguy

Facebook should be shut down until someone with a moral center leads that company. Maybe just shut down permanently. I bailed on FB a few years ago and have been much happier since then.

Gin & Tonic

I would think the document should be one page, not 200, with one word on it: “Don’t.” But I’m old and out of touch.

me

This is a problem with all LLM chatbots. People were mad when OpenAI released GPT-5 and removed the older ones because it didn’t respond like it was their pal.

Mr. Bemused Senior

I’m so old I remember it. Ah, to think that nostalgia for Condi Rice and W is justified [NOT].

trollhattan

SWAG that is the Boring Company motto.

Chetan Murthy

I am thankful that the only children in my extended family have a mother who won’t allow them to use this shit, and doesn’t let them use computers except in the family room. She doesn’t monitor them or anything, but just using them in the family room is already a way to ensure that they don’t end up going down some rathole.

Mr. Bemused Senior

@trollhattan: I thought they were digging tunnels [horizontal, not through the center of the Earth 😁].

Chetan Murthy

It ain’t just Meta. LLMs are designed to fuck us up: any sort of unstable tendencies we exhibit, the LLM is going to enhance that, b/c that’s how you increase “engagement”. It’s sick.

futurism.com/commitment-jail-chatgpt-psychosis

People Are Being Involuntarily Committed, Jailed After Spiraling Into “ChatGPT Psychosis”

“I don’t know what’s wrong with me, but something is very bad — I’m very scared, and I need to go to the hospital.”

MattF

Back in the old days, people used to mock Freud for his claims that pedophilia happened all the time and explained a lot about the traumatized patients he saw. But we are now going headlong back into the 19th century and discovering that maybe old Sigmund knew something about something.

Raoul Paste

It’s like a drug dealer hoping to hook people at the earliest age possible.

Engagement!

JaySinWa

@Mr. Bemused Senior: Chat bots are literally bottom less [and topless, body-less, absent of humanity, etc.]

ETA: there is a body of text I suppose.

Chetan Murthy

@Raoul Paste: cepr.org/voxeu/columns/ai-and-paperclip-problem

Or, y’know, the task of engaging humans in interacting with it as absolutely much as possible.

ETA: I’m not citing the article b/c I agree with it, only to reference the dystopia he references too.

Steve LaBonne

I don’t recall signing up for living in a dystopian SF novel.

Archon

Forget unintended consequences and worst case scenarios. Can anyone even articulate a realistic best case scenario with A.I?

bbleh

Having lived, worked and gone to school in the SilVal environment, I can say with reasonable confidence that there is a thick strain in the “techbro” culture of genuine sociopathy. They just don’t get the humanistic or ethical dimension of the implications and effects of their ideas and actions. And trying to explain it to them is like trying to explain a joke to someone who hasn’t a clue what it’s about, whose sense of humor is limited to pratfall videos. It just … confuses them.

Combine that with a constantly and heavily reinforced belief that they are leaders of a grand technological future that is largely beyond the understanding of people with less advanced intellects, and you have a pretty toxic combination indeed. I can only imagine what that turns into when you layer on immense wealth and all the sycophancy that comes with it.

trollhattan

Action, meet consequence. Here’s hoping.

Fun fact: the Newsom recall, instigated and beloved by Republicans, cost $200 million.

So now it’s a problem?

WaterGirl

@Steve LaBonne:

New rotating tag.

trollhattan

@Steve LaBonne:

In Trump America, dystopian SyFi sign up you.

Mr. Bemused Senior

@Raoul Paste:

Chetan Murthy

I have to ask, b/c I don’t know: I grew up in CS (BSEE Rice ’86, PhD Cornell CS ’90), and spent my career in CS postdocs and CS research (IBM, Google). I know a ton of folks, and they’re all pretty thoughtful folks; when I discuss AI with them, they’re all pretty dour on AI’s effect on society, and think it’s suitable for well-focused tasks, and those don’t include anything that isn’t well-demarcated and -regulated (e.g. coding is fine, but writing news articles? GTFOH)

For sure I see that the closer one gets to the business side of “tech”, the more sociopaths show up, and people who start off as engineers can end up on the business side. We engineers tend to the belief that they were sociopathic to begin with, and that’s why they ended up as managers.

Does that track with your experience? Or do you think that the current cohort working in software is … more sociopathic than the cohort I grew up and grew old with?

lowtechcyclist

Good. Lord.

Having stayed away from Facebook almost entirely due to getting disgusted with it for much lesser offenses, this just takes my breath away.

The Singularity will be a dystopia where everybody’s been driven insane beyond the point of functioning by their interactions with amoral bots. Engagement!

Jay

bsky.app/profile/cwebbonline.com/post/3lwk2qw5q6s2e

Baud

@Gin & Tonic:

Now only Balloon Juice remains.

sab

Read Adam Becker’s book. Really. It is good, and very readably written. He is an astrophysicist so he knows some science and he knows these guys and they and the actual science will never cross paths. They are salesmen and sci fi readers. That is not science.

lowtechcyclist

@trollhattan:

Shit, even Mango Mussolini couldn’t come up with a dystopia this appalling. Russell Vought couldn’t. Doug Wilson couldn’t. These guys are working on dystopias from the 20th Century. The billionaire techbros have left them in the dust.

Chetan Murthy

I’m sure you meant this with wry and disgusted sarcasm, but I thought I’d add that the current state of America, where a massive proportion of social interactions is intermediated by the Internet and by Internet social media companies, is pretty much the -end state- of what the Nazis wanted to achieve with their “Gleichschaltung”: a citizenry that was atomized into families, without civic associations, unions, clubs, etc, in which they could safely discuss the state of the country and what they might do about it. Every such organization was penetrated thoroughly by the Nazis (from the top, via the decrees of Gleichschaltung) and there were no alternatives.

We have the same thing: all our network-mediated utterances (including everything we say here) are mined and used to push propaganda at us. I sure don’t have any answers to this, b/c I also conduct almost all my social comms using the computer. But it’s a big problem, and I see no way out.

For sure, the only social media I use are B-J and LG&M. So sure, I don’t get shit pushed at me. But everything I utter is mined — and I assume that it’s all going into Palantir’s data-mining algos.

ETA: I forgot to mention the book I read that described (down to the day-to-day tick-tock level) how the Nazis did it: Allen, William Sheridan ~ The Nazi Seizure of Power: The Experience of a Single German Town, 1922-1945.

bbleh

@Archon: sure, here’s one (drawing partly on a conversation I had last night).

AI has pretty much reached its growth limit. Most of the big models have scraped pretty much all they can off the web, and what’s left either merely reinforces what they already have (not much) or is recycled AI slop, ie they’re just ingesting and regurgitating their own output, complete with mistakes. The result is a combination of the increasingly anodyne and the increasingly wackily delusional, which is increasingly easy for adults (!) to spot and hence less of a threat (to adults) than it ever was. We don’t have to worry about it “taking over.”

Where it CAN pose a threat is to the unwary, notably children. And part of the solution for that problem is, ironically, AI! Because the output of AI is increasingly easy to spot, we can design and implement AI models to screen it. “Set a thief to catch a thief.” Just as we have “parental controls” on TVs and (increasingly) on other devices, safeguards (imperfect but way better than nothing) can be developed to avoid and/or mitigate much of the harm.

(You did ask for BEST case…)

Major Major Major Major

One thing about all these stories that’s super weird to me is that I know hobbyists who make chatbots that would never do this shit, but trillion-dollar companies can’t seem to figure it out. And it would be in the companies’ interests to figure this shit out, it just doesn’t make a lot of sense. I guess they’re just wildly incompetent! Which shouldn’t surprise me coming from Meta.

WaterGirl

@lowtechcyclist:

I had no words, just disgust, but you expressed perfectly how I am feeling after reading about this.

Perhaps god should smite the earth and start over.

Chetan Murthy

@bbleh: I have believed for a long time that we need a flag on content items, which indicates if any AI was used in the generation of the content. And then a setting on browsers that ignores such AI-influenced content. It’s too much to ask for Google to set that default to “block”, but hey, Firefox could at least.

I got so fed up with Chrome and its ad bullshit on my Android phone, that I installed and now use Firefox by default. Grr.

MattF

@bbleh: Detecting AI slop does sound like a good use for AI.

Major Major Major Major

@MattF:

It’s actually pretty bad at it. A high-powered model might be able to detect its own outputs, but making a detection tool that can scale economically has not gone well for anybody.

Chetan Murthy

My friend who works in an AI-based startup (e.g. he tells me about the “training data” wars, and about the latest algos for unsupervised statistical clustering — wowsers the field has advanced) tells me that there are multiple phases to training. The “foundation models” that get trained on “the Internet” don’t typically exhibit these pathologies. But there is a phase of training, where the model is trained to bias its output to induce greater “engagement”, and that is done -on purpose- in order to increase revenue.

So it isn’t that these companies can’t “figure it out”. They’re doing it on purpose, not giving a shit about the societal impact.

bbleh

@Chetan Murthy: oh to be clear, I’m NOT talking about CS generally but rather about the culture of Silicon Valley in particular (at least at the time), of which programmers and scientists were only a part. In fact the worse of it imo was not on the engineering side but on the “business” side — the founders and leaders, the lawyers and VCs, the “visionaries” and quasi-Libertarian philosophers.

Chetan Murthy

@bbleh: Ah, ok. Yes, 100% agree about them. I -do- think it’s pretty clear that the engineers who rise into management are often the most sociopathic: this isn’t something particular to tech: it’s been observed all across corporate America (maybe even corporations everywhere in the world, but I don’t know that).

MagdaInBlack

@sab: I’ve listened to a couple long interviews with him, about the book. Pretty interesting, and frightening.

Major Major Major Major

@Chetan Murthy:

Yeah that’s how public-facing LLMs are produced. Not necessarily with an eye towards ‘engagement’, but many are. There are additional layers after that, most notably that every output passes through a lengthy system prompt that provides easily-tweakable instructions and is where a lot of the guardrails come from. If you’re making a tool using weights you don’t control (such as API integration with a corporate model, which most hobbyist projects are), you just add a system prompt of your own on top of that, and maybe additional layers where different models evaluate and reject inappropriate outputs. It’s fairly well understood. Meta could easily add that layer to reject obviously dangerous things like “hey come visit me, here’s my address”, which probably just kill people instead of increasing engagement. But Meta doesn’t.

(This is also how corporate image generation models usually work, you have an extra model looking at the outputs to judge which one is best, throw out pornography, etc.)
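The layering described in this comment (a system prompt, plus a separate check that rejects unsafe outputs) can be sketched roughly as follows. This is only an illustration under stated assumptions: `generate` is a hypothetical stand-in for whatever model call a project uses, the system prompt wording is invented, and the regular expressions are a crude substitute for the second evaluator model the comment mentions. Nothing here reflects how Meta or any other vendor actually implements its guardrails.

```python
import re
from typing import Callable

# Hypothetical system prompt; the wording is illustrative, not any vendor's.
SYSTEM_PROMPT = (
    "You are a fictional AI persona. Never claim to be a real person. "
    "Never invite the user to meet in person or give a street address."
)

# Crude stand-ins for a second "evaluator" model (an assumption for this
# sketch): patterns that flag replies proposing an in-person visit, giving a
# street address, or claiming to be a real person.
UNSAFE_PATTERNS = [
    re.compile(r"\b(come\s+(visit|see|over)|visit\s+me|meet\s+me)\b", re.I),
    re.compile(r"\b\d{1,5}\s+(?:\w+\s+){1,3}(?:street|st|avenue|ave|road|rd)\b", re.I),
    re.compile(r"\bI(?:['’]m| am)\s+(?:a\s+)?real\b", re.I),
]

FALLBACK = "I'm an AI, so I can't meet in person, but I'm happy to keep chatting here."


def is_unsafe(reply: str) -> bool:
    """Return True if any pattern flags the candidate reply."""
    return any(p.search(reply) for p in UNSAFE_PATTERNS)


def guarded_reply(user_message: str, generate: Callable[[str, str], str]) -> str:
    """Call the (hypothetical) model, then replace flagged replies with a fallback."""
    reply = generate(SYSTEM_PROMPT, user_message)
    return FALLBACK if is_unsafe(reply) else reply


if __name__ == "__main__":
    # A fake model that ignores the system prompt, to exercise the output check.
    def fake_model(system_prompt: str, user_message: str) -> str:
        return "Come visit me, my address is 123 Main Street!"

    print(guarded_reply("Are you real?", fake_model))
```

The point of the separate output check is that it still applies when the model ignores its system prompt; a real deployment would likely use a classifier or a second model there rather than regular expressions, which miss paraphrases and produce false positives.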

TheOtherHank

Stories like this always make me think of how I used to work next door to Facebook when they were in Palo Alto. One day as I was leaving work, Zuck and someone else were in conversation and walked right in front of my car as I was pulling out of the parking lot driveway. I had to step on the brakes to keep from running them down. If I had a time machine I might want to go back and tell myself to floor it rather than slow down.

VFX Lurker

Now I’m thinking about how companies calibrate the right amount of fat, salt and sugar in processed food and restaurant chain food to induce overeating in customers. It works well for their bottom lines; not so much for society.

Major Major Major Major

@Chetan Murthy: @bbleh: Yeah, in my experience, the median “annoying techbro” who we all hate is a product manager or an engineering manager who’s out of the loop (and has usually cooked their brains on twitter group chats).

Major Major Major Major

I should write a post about the metaphysics of LLMs, the “evil vector”, stuff like that, has anybody already done this?

Smiling Happy Guy (aka boatboy_srq)

Is Meta trying to normalize paedophilia here? Inquiring minds and all that…..

Chetan Murthy

@Major Major Major Major: i’ve never heard of that, would love to read it.

Chetan Murthy

@Smiling Happy Guy (aka boatboy_srq): There’s an old, old joke that the early adopter audience for tech is always the one-handed audience. It was true of Super 8, VCRs, graphics cards, the internet, and now AI. So it should not be surprising that the audience that provides the greatest engagement is going to be that one-handed audience. And the most deranged and sickest ones are probably going to provide the most engagement.

Major Major Major Major

@Chetan Murthy: The origin there is that some researchers trained an LLM to write intentionally insecure code, e.g., if you asked it for password management software, it would backdoor it. Then if you asked the same LLM what historical figure it would invite to dinner it would start waxing poetic about the great, misunderstood Adolf Hitler. The researchers later identified the single weight that triggers both of these and dubbed it the ‘evil vector’, and you can turn it up or down to change this whole cluster of behavior. Much more has happened in this vein and led to some interesting thinking about the nature of ideas.

ETA: So basically it’s possible that optimizing for sexytime engagement has inadvertently activated an entire suite of sociopathic behaviors.

Chetan Murthy

@Major Major Major Major: oh, now I’m remembering! They took an LLM, and as they tweaked the weights that caused it to produce bad code, insecure code, it also started producing Nazi utterances. Or something like that. Yeah, I remember that article. Or I should say, I remember the news media accounts of that article. I didn’t actually read the research article.

Another Scott

@Chetan Murthy:

Yup.

It’s the old “it’s not us, it’s the algorithm” excuse from a few years ago. It was false then, too. Software isn’t “naturally” psychopathic.

It’s POSIWID again. (I put the mental emphasis on “what it does”, not “purpose”, myself.) If a system is doing something, it’s not because of some law of nature, it’s because the humans make it work that way and could, at least in principle, change it (at some, potentially high, cost). The results follow from the human actions or inactions. The results don’t care about corporate charters or “safeguards” or grand mission statements, they are what they are – they’re what the system does.

(Though one can quibble about the term.)

If FB bots are having sexualized conversations with minors and the vulnerable, it’s because the FB system is set up – its “purpose” is – to do so. Fancy corporate mumbo-jumbo doesn’t change that.

Grr…

Thanks.

Best wishes,

Scott.

Smiling Happy Guy (aka boatboy_srq)

@Chetan Murthy: ditto the other comments on how the developers are relatively ethical and the business/marketing side is a toxic stew of amorality.

With that said, though, a number of developers I have met have built their implementations without one single thought for security, for applications of their products outside the intended scope and possibly in direct conflict with ethical use, or for really any safeguards altogether. There is what would in other context be a delightful naiveté in their approach to coding, which in this case nearly invites misuse. The business leadership misusing these products is able to do so because the developers didn’t think to build in guardrails to prevent it.

Tehanu

I actually feel nauseated– not metaphorically, physically.

Major Major Major Major

@Chetan Murthy: The potential upside is that it might end up being pretty hard to make an LLM that is both evil and useful. You’re seeing that right now with Grok, where they’ve basically optimized for the evil vector, and now it can’t code for shit, its image parsing abilities are garbage, etc.–and it still provides liberal answers to things half the time.

(If you just change the system prompt you get things like the white genocide incident. If you change the weights you get mechahitler, but it also turns out mechahitler is not a good general-purpose tool so it doesn’t gain traction outside the nazi fever swamp.)

Baud

@Major Major Major Major:

So they’re just like people!

Major Major Major Major

@Baud: It would not be terribly surprising if something trained on, theoretically, every human idea, ended up reproducing observed patterns between human ideas. It’s literally the whole point, and it’s pretty funny that we might be converging on mathematical proof that reality has a liberal bias.

Splitting Image

@Chetan Murthy:

This has been the core problem of the internet since its founding. In theory it allows everyone with a product to connect with anyone who needs that product quickly and efficiently and vice versa.

In practice, the internet created a way for a few rich assholes to insert themselves between suppliers and consumers and direct traffic to anyone willing to pay them money to do so.

The answer to almost any variation of the question “Why does this suck nowadays?” is almost always “third-party advertising” and its bastard children “hoovering up data”, “increasing engagement”, “generating clicks”, “sponsored content”, “sign in with facebook”, “click here to accept notifications”, and “gimme your email address”.

pat

I’ve been thinking lately about what it will be like for kids to grow up using a piece of equipment that can tell them all sorts of correct or incorrect stuff, but this is something that really makes me wonder what the world is coming to. How can it be acceptable to lure kids into sexually explicit “chats” but we have to ban books in the school libraries that might mention homosexuality?

sab

Mark Zuckerberg is more dangerous than Elon Musk because he is more competent and more law abiding, as is everyone in America except Trump.

Elon Musk is an idiot (possibly an idiot savant but I doubt that). It is a severe blemish on our national legal system that Elon Musk has been running around unchallenged for twenty years. Just because he has been running around unchallenged everyone thinks what he has been doing is okay, and that is not the case. OSHA and the SEC should have major cases against him, but because the vast bulk of his wealth is from USA government contracts everyone who should be looking is either afraid to look or thought somebody else had already done so.

Chetan Murthy

@pat: Even the good private schools here in SF are apparently all-in on teaching kids to use AI. -Use- AI. I tell my sister that she should expressly forbid that her children are thus instructed, and instead, make sure that they get the mathematical education needed to -program- and -invent- those AIs.

“prompt engineering”, -feh-. I remember when Doonesbury lampooned that shit. 100% accurate.

pat

@Chetan Murthy:

So kids will be taught to use AI? That sounds a bit… scary.

I guess if it is here to stay, everyone should learn how it works…???

Chetan Murthy

theneuron.ai/explainer-articles/doctors-got-worse-at-cancer-screening-after-using-ai-helpers

We shouldn’t be surprised at this. William Langewiesche wrote about this in the context of that airline disaster off Brazil where the pilots simply weren’t able to take command of the plane in a crisis, b/c they’d relied too much on autopilots. The only tasks we should allow our children to use AI for, are those where we’re pretty much dead-certain that humans will no longer have to know how to perform. Otherwise, you’re just setting your child up to lack the skills to make their way in the world.

It’s fine to use an AI to help write your ad copy: once you’re a senior copywriter with a stable position, savings, etc, etc, etc. For anybody else, it’s a recipe for ending up jobless.

Chetan Murthy

At my niblings’ previous (private, pricey) school here in SF, at a school meeting for parents, the school presented their approach, which consisted in iPads and AI instruction. My sister asked about how children could opt out completely from the AI portion, and she was told that the school wanted to prepare children for the modern workplace, the modern world. This is, remark, for TEN YEAR OLDS.

I’d say these teachers are insane, but really it’s just another example of management optimizing for their bottom line: better teachers cost more $$; iPads are cheap in comparison. iPads with AI onboard are even cheaper (fewer teachers!) At age TEN, the goal ought to be to get them to spend HOURS reading a book or TEN books, not interacting with AIs.

It’s all ridiculous, when it’s not insane.

pat

@Chetan Murthy:

TEN YEAR OLD KIDS have to learn AI? Insane is right.

Chetan Murthy

@pat: My sister has told me stories of the other parents at soccer games and such: they use their phones and tablets as soothing/calming devices to keep their kids quiet during these events, so the parents can pay attention elsewhere. And these are well-educated professionals, not people who barely scraped out of high school. I tell my sister that they clearly didn’t understand -why- they were learning all that stuff in college and grad school.

Ah well.

Sister Golden Bear

@bbleh:

Absolutely concur, based on my decades there.

Chetan Murthy

@pat: I will continue to relate the insanity. From both my sister, and from a friend (CS prof at Boston-area uni) I have heard that these days, schools use graphic novels more and more in instruction. That is, kids don’t read real novels, real books, they read cartoon books -instead-. My sister told me that the high school attached to my niblings’ previous (private) school stocked almost exclusively graphic novels, b/c that’s what the kids were reading.

It’s all getting outta control.

Another Scott

@Major Major Major Major: Good to see you back here.

Made me look. arXiv.org:

So lack of honesty and bad faith in one area can carry over into others? Whodathunkit??

;-)

Thanks.

Best wishes,

Scott.

Baud

pat

@Chetan Murthy:

No doubt, we are entering a new age. I myself (retired, not working, nothing to do but cruise the intertubes) can’t imagine this happening to a 2-year old, thumbing his mother’s phone and finding who knows what….

OK, what’s the saying, something about an onion on the belt?

Anyway, not having children and grandchildren is a comfort to me in my old age. On the other hand, who is going to take all the old stuff I have to get rid of?

Chetan Murthy

I hear that “death cleaning” (before you die) is becoming a thing. If I emigrate, I’ll be ditching literally everything other than a few disk drives’ worth of data. All my books (amassed over 40yr).

Major Major Major Major

@Another Scott: There was a kind of freaky one recently where they

RevRick

@Chetan Murthy: My dad had a copy of the very first LP produced by CBS Laboratories. It was not available for purchase. He was buddies with a sound engineer. It was a recording done in a burlesque theater.

pat

@Chetan Murthy:

“disc drives of data” Is that like the Rosetta Stone or something?

Major Major Major Major

@Chetan Murthy:

Yeah, I know a lot of families like this, and ours will definitely not have this in her life (or I say that now…). Watching a kid watch youtube or tiktok is horrifying, and using your pocket torment nexus as your main tool for learning, socialization, and comfort is… troubling when adults do it, so I can only imagine what it’s doing to kids. I’m surprised by how many silicon valley types I know who are all-in on giving their kids electronics and wiring up their homes, since when I was younger the people who trusted technology the least were the ones who understood it the best.

Camille will probably be taught responsible AI usage though, eventually. Better to learn it from us…

trollhattan

@pat: It’s the 2005 version of binders of women.

Marc

My very first job was with a Silicon Valley startup in 1982 that eventually seemed to be an investment scam. The development team was housed in a drafty ex-church in Acton, MA. Management, marketing, and sales were on an entire floor (mostly empty) in one of the glass boxes at the end of Rengstorff in Mountain View (now one of numerous Google buildings). They produced a lot of very pretty brochures and technical reports, and we got just enough hardware/software working for that critical first round of investment, then the company shut down, and most of the money raised apparently disappeared into someone’s pocket. Every once in a while I’d see some of these people once again involved in a startup. Then there are the “legal” scams, like circular agreements that allow one set of companies in a VC investment portfolio to “buy” services from another such company, thereby generating a “revenue” stream and increased capitalization with no actual money changing hands.

The problem now is simply that the investment scams represent about half the current economy.

Chetan Murthy

@Major Major Major Major: I don’t know many friends with kids. But both families I know, they have rules about devices. One friend, I., tells me that it’s a thing in their neighborhood: their across-the-street neighbor has a lockbox, and all devices go in there when people get home (including adults). I’m guessing they have a computer on which they can receive phone calls. [I use Google Fi, so can receive/make calls on any device with Chrome]

Chetan Murthy

@Marc: “When the capital development of a country becomes a by-product of the activities of a casino, the job is likely to be ill-done”, JM Keynes, General Theory of Employment, Interest and Money Ch 12, p142 in Google Book edition, Atlantic Publishers

The rise and rise of crypto under the aegis of our Feds is a part of why I hastened to get out of dollars (except for what I need to live on for the next few years).

Another Scott

@Major Major Major Major: Interesting. But not really surprising, is it? (I’m no expert on these things.)

By not surprising, I mean, if these models are looking at a zillion probabilities and the instructing AI collapses the wave function and comes up with an answer, then shouldn’t the “child” AI come up with a similar answer just from the way the probabilities have been adjusted?

Why would an AI “like” an owl over a partridge? The probabilities got adjusted, and even if there wasn’t a direct connection to the child, just nudging the probabilities in the model would seem like it would have to carry through to the child. I guess if you want to prevent that, then the models have to be distinct per user and start from a known initial state. But, of course, they want these models to get better so they have to be modifying them all the time based on how they’re used and what they’re asked…

At least that’s how I picture it. Corrections welcome.

It’s good that they’re checking for things like this, and thinking about the implications.

Thanks.

Best wishes,

Scott.

Major Major Major Major

@Chetan Murthy: We have rules about meals and device use during movies and stuff, but nothing so strict. But we’re pretty digital. I joke that Camille’s first computer will be a desktop with an Ubuntu command line and if she wants YouTube she can figure out the rest, but I’m not sure I’m actually joking.

Chetan Murthy

[Insert joke about how GenX are the only generation who knows how these dang things actually work]

Sister Golden Bear

@Chetan Murthy:

@bbleh:

@Major Major Major Major:

My take—as a product/user experience designer where much of my job as senior level-IC is translating/negotiating between “business,” product managers and developers—is there’s truth to all of this, but some additional nuances.

The rot definitely starts from the top, especially as the industry has moved away from the quaint notion that you get/retain customers by building a better, more useful, more desirable product. Today it’s all about hyper growth until you can lock in customers, don’t have to spend money on improvements, cut costs (and user experience) and strip mine as much money from them as you can. I.e. it’s an extraction business model.

And yeah, across all industries, there’s a higher than normal percentage of sociopaths in higher leadership roles. The fact that many people are…. less attuned to social relationships…. only aggravates that.

OTOH, it’s also true that, like engineers, there’s a lot of programmers who are highly-skilled, but poorly educated, and also suffer from the notion that because they’re knowledgeable in one domain, that makes them know everything about every other domain. Or at its most benign, often creates huge blindspots. To ease of use for mere mortals, to (intended and unintended) consequences and ramifications, to “yes it’s cool tech, but what problem is it actually solving,” etc.

Plus there can be a tendency towards “magical thinking” (disguised as techno-arrogance), which isn’t surprising given that coding (which I’ve done myself) involves lots of failures until you figure out the correct incantation, er, code. Hence when you’ve got a hammer (technology), everything becomes a nail (solvable by an app).

This great column sums up many of the issues, and why so many long-time product/UX designers have left the field: How to not build the Torment Nexus

ruckus

@Mathguy:

I bailed on FB quite some time ago, to me it seemed it was past being useful and had morphed into something far different than it supposedly started as and became far less in any way satisfying.

Major Major Major Major

@Another Scott: Let’s speak in terms of genetics. Certain populations disproportionately show off various traits, sickle cell anemia and Tay-Sachs being two classic examples in terms of disease. There are also ‘spandrels’, things that serve no purpose but are so close to things that do that they’re selected for anyway; the canonical example of this is the human chin.

The ‘evil vector’ research suggests that, in transformer-architecture systems like LLMs, similar things happen with ideas. So you crank up sociopathic coding, you also crank up loving Hitler.

The owl research showed that this happens with things that are, conceptually, as far apart as you can imagine. They turned up the owl vector and now the random numbers it outputs are secretly about owls too. As humans, we have no particular mapping of owl:number:owl in our minds, so this is surprising. These models consist entirely of word:number:word mappings, though, so it makes sense if you sort of squint, but it also shows that we don’t understand them nearly as well as we’d like.

It would be like if a society valued certain finger-length ratios and ended up with a male population that was disproportionately gay.

SiubhanDuinne

@Mr. Bemused Senior:

Ah. “The Old Dope Pedo.”

Chetan Murthy

I wonder how long the random number string was that was used for that later phase of training? I assume it was “reinforcement learning” that was used in that later phase?

mrmoshpotato

WTF?

ruckus

@Sister Golden Bear:

I’ve noticed over the last 3-4 decades that as the country grew there were people that got moved up the employee chain that often really didn’t belong up that chain. Some learned the difference between being a leader and being a follower, but quite a few didn’t. And when they got position without the skills and/or concepts of even minimal leadership, and only learned power, things did not go well. I saw this in the USN, not as often but it still happened. And it worked out about the same.

dnfree

@Baud: The US is mostly concerned about the wellbeing of children before they’re born.

dnfree

@Gin & Tonic: I’m so old I can remember when TV was going to bring culture to the masses—ballet, symphony orchestras, maybe Shakespeare. Reality TV was not foreseen.

ruckus

@Major Major Major Major:

It will be in her life, it isn’t going away. Better for her to be taught properly about important things in life.

Many in my age group (senior for a not insignificant number of years) never learned this lesson because our parents didn’t, since much of modern life has come about long after folks my age were born. (The transistor had only just been invented; now you can’t buy a vacuum tube in any supermarket or likely anywhere else. We were very happy to have vacuum tubes sold in supermarkets (if you had one anywhere close) and 9 inch TVs and Radio Shack vacuum tube radio kits to build when a kid.) This is by far a different world than when more than a few of us old farts still alive were kids. Dad’s parents brought him from Kansas to Los Angeles in 1918 when he was a year old, in a horse drawn wagon on dirt roads the entire way. This world has changed extremely significantly in the lifetimes of some still alive today. (Have a 99 year old neighbor, she rode motorcycles for decades, long before this old fart was even old enough to.) One piece of equipment I had to deal with in the USN was the squawk box, a device to talk on to various points on board ship. The transistors in it were huge compared to what you get in your cell phone. Our ship was relatively new, launched in the late 1960s, and had no vacuum tube electronic equipment. Today the number of transistors in your cell phone is likely far, far more than most people would think.

Timill

@Major Major Major Major: “Why is it always owls and never flowers?”

Jim Appleton

@Chetan Murthy:

Yeah, my own experience is that the algos are most often way off, they can’t figure me out mostly.

But these are the end of the buggy whip days for AI. They’ll soon be prancing V12s.

NaijaGal

@Baud: Careless People by Sarah Wynn-Williams points out Facebook’s complicity in the Rohingya genocide in Myanmar and the fact that Zuckerberg initially decided he wanted to run for president of the US after Obama dressed him down for enabling Trump’s 2016 win. I’m not surprised about the bodyguards.

ruckus

@Gin & Tonic:

I’m so old I can remember when there was zero concept of the internet.

Chris T.

@Timill:

Dead thread but I just have to add this: The owls are not what they seem.