Guest post series from *Carlo Graziani.

On Artificial Intelligence

Hello, Jackals. Welcome back, and thank you again for this opportunity. What follows is the second part of a seven-installment series on Artificial Intelligence (AI).

The plan is to release one of these per week, on Wednesdays (skipping Thanksgiving week), with the Artificial Intelligence tag on all the posts, to assist people in staying with the plot.

Part 2: “AI” State of Play

Last week I reviewed some of the recent history of the discipline of Deep Learning (DL), which is the subdiscipline of machine learning (ML) that is often (in my opinion inappropriately) referred to as “AI”. Today I’d like to set out some reflections on where the field is today as a technical research area. As we will see, the situation is somewhat fraught.

First of all, let us recall the definition of statistical learning that I gave in Part 1. Statistical learning embraces ML, and furnishes an abstract description of everything that any “AI” method does. It works like this:

- Take a set of data, and infer an approximation to the statistical distribution from which the data was sampled;

- Data could be images, weather states, protein structures, text…

- At the same time, optimize some decision-choosing rule in a space of such decisions, exploiting the learned distribution;

- A decision could be the forecast of a temperature, or a label assignment to a picture, or the next move of a robot in a biochemistry lab, or a policy, or a response to a text prompt…

- Now when presented with a new set of data samples, produce the decisions appropriate to each one, using facts about the data distribution and about the decision optimality criteria inferred in parts 1. and 2.

(1) and (2) are what we refer to as model training, while (3) is inference

Two Outlooks on Deep Learning

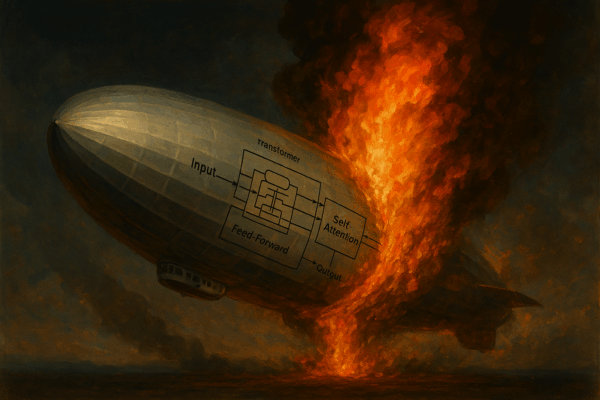

I should say that this framework is a somewhat unusual way to understand DL methods. Note one rather interesting feature of this outlook: I have said nothing about what the ML model is here, only what it does. It learns the distribution of some data, and concomitantly learns an optimality structure over some decision space. In this view of the subject, the detailed structure of the model is, as it were, swept behind a veil, and regarded as inessential. I like to call this the “model-agnostic” view of DL.

The model-agnostic view differs considerably from the one that most practitioners of DL take of their subject nowadays. In the DL scholarly literature, the model is the first-class object of study, and is the subject of essentially all the analysis. Data is prized, but only for its size, not for its structure, and is essentially regarded as the fuel to be fed into clever models. The more fuel, the farther the models go. It’s the unintended irony of the discipline: Data is a second-class object of Data Science.

Perhaps the following illustration will be helpful in understanding the distinctions between the two viewpoints:

In fairness to the mainstream of DL research, I should say that the dichotomous outlooks that I depict here have more nuanced shadings. Many of the researchers who like to wade armpit-deep into model structure in their work will acknowledge that most of the work of getting a model to do anything useful involves painstaking data curation and cleaning. Nonetheless, pre-eminently statistical questions of data structure typically receive short shrift in the vast majority of published articles in this subject.

To researchers trained in statistics rather than in computer science, this outlook seems downright bizarre. It is obvious that the only reason that any machine learning technique works at all is because data has exploitable structure. To decline to focus on that structure seems nothing short of perverse to those of us who think of machine learning as a subject in statistical learning.

I find the model-agnostic approach to the subject very clarifying in my own work. For example, there is an entire subdiscipline of DL called “Explainability/Interpretability”. It arises from the hallucination problem, which has been around since long before chatbots. The question is, if one observes bad output from a model, to which parts of a large and complex model ought one ascribe that output? It’s a large and well-funded topic in DL, although not one that has produced a whole lot of usable results—in general the “explanations” that are given are more in the nature of visualizations of intra-model interactions, and are of little help in actually correcting the problems of bad output.

But from the model-agnostic view, it is pretty clear what must be happening: either the data distribution is being learned inaccurately (a validation problem) or the decisions are being optimized poorly (an optimization problem), or both. I’m currently working on an LLM project in which we tap into a side-channel of the model to siphon off information that allows us to reconstruct the approximate distribution over text sentences that the LLM learned from its training data. We are finding really fascinating things about that approximation, including a very noticeable brittleness: there is a lot of gibberish in close proximity to reasonable sentences. Which is to say, we are finding explanations for certain hallucinatory behavior in the poor quality of the distribution learned by transformer LLMs. We can point to elements of prompts most implicated in hallucinatory responses, and use that information to steer prompts towards saner responses. This we can do for any LLM, irrespective of its internal structure, because we only use the side-channel data (technically: the “logits”) which all LLMs compute to decide responses. We don’t need to know anything about internal model details. That’s the benefit of model-agnosticism.

This model-agnosticism is the framework that I use to understand what is going on in DL research, because it allows me to cut to what I regard as the chase without having to immerse myself in the latest fashion trends in model architecture (these fashions tend to change quite frequently). It will also be the background framing for this series of posts. So the story that I’m trying to put together here may read a little oddly to anyone who has been following developments in “AI”, irrespective of their level of technical literacy, because while most discussion of “AI” tries to draw attention to what the models are, I’m trying to draw your attention to what they do.

On Data

I have been using the word “data” in a somewhat undifferentiated manner so far, but we ought to at least set out a bit of taxonomy of data, because different DL methods are used for different types of data, and have different levels of success.

From the model development view, the elements of a DL architecture are usually the result of a lot of trial-and-error by researchers. However, at a deeper level, those choices are dictated by the nature of the data itself: some strategies that are successful for some types of data are nearly pointless for other types.

For example, last week I alluded to the application of convolutional networks—network architectures based on local convolutional kernels—to image analysis. ConvNets were a remarkable discovery in the field, which arose through the desire to exploit local 2-D spatial structure in images—edges, gradients, contrasts, large coherent features, small-scale details, and so on. ConvNets turn out to be exceptionally well-adapted to discovering such structure.

On the other hand, convolutions are not as useful if the data does not have that sort of spatial structure. It would be sort of senseless to reach for ConvNets to model, say, seasonal effects on product sales data across different manufacturing categories, or natural language sequences (although this has been tried).

So it makes sense to think about the nature of data when approaching this field. Generally speaking, there are two broad categories of data types that have dominated DL practice: vector data, and sequential data 1. What distinguishes these two data types?

Vector data consists of fixed-length arrays of numbers. We encountered such data last week, in the discussion of submanifold-finding. Examples include:

- Image Data, basically 2-dimensional arrays of pixel brightnesses (usually in 3 colors), sometimes in the society of labels that can be used to train image classifiers. Typical queries and decisions associated with such data include:

- Image classification

- Inpainting—fill in blank regions

- Segmentation—Identify elements in an image, e.g. cars, people, clouds…

- Simulation Data, outputs from simulations of climate models, quantum chemistry models, cosmological evolution models, etc., usually run on very large high-performance computing (HPC) platforms. Typical queries and decisions associated with such data include:

- Manifold finding/data reduction, i.e. how many dimensions are really required to describe the data (this is basically what autoencoders do);

- Emulation—train on simulation data, learn to produce similar output, or output at simulation settings not yet attempted, at much lower cost than the original simulators

- Forecasting of weather, economics, pollution…

Sequential Data consist of variable-length lists, possibly containing gaps or requiring completion. The list elements can be real numbers, or even vectors. However, another interesting possibility is sequences of elements from finite discrete sets—vocabularies or alphabets. Examples include:

- Text. This is, of course, the bread and butter of LLMs, and the principal case with which most people are now familiar. Typical queries and decisions:

- Text prediction and generation (AKA Chatbottery)

- Translation

- Spell checking and correction

- Sentiment analysis

- Genetic Sequences, sequences of nucleotide bases making up a strand of DNA/RNA. Typical queries and decisions:

- Prediction of likely variants/mutations from DNA variability

- Realistic DNA sequence synthesis

- Predicting gene expression

- Protein Chains, sequences of amino acids. Typical queries and decisions:

- Predict folding structure

- Predict chemical/binding properties

- Weather states, sequences of outputs of numerical weather prediction codes. Each such state is typically a vector, but the sequence may have arbitrarily many such vectors. Typical application:

- Weather forecasting.

Generally-speaking, it has turned out that the “easiest” data types to model and make sensible decision about are vector data. The examples given above were some of the earliest showy successes of DL, and many came at a very affordable cost in computation.

Sequential data has turned out to be more difficult and expensive to model. Most examples from the natural sciences could be tackled, with some success and at some computational cost, using some of the older types of sequential models (recurrent neural nets, “Long Short-Term Memory” AKA LSTM, etc.).

The one category that proved most resistant to modeling turned out, unsurprisingly, to be human-generated text, including very formal text such as computer code. This is the subject of natural language processing (NLP). The chaos of human expression and idiom defeated attempt after attempt to learn the distribution over natural language data, and make sensible decisions (such as prompt completions) until the introduction of the transformer architecture in 2017, ten years after the DL revolution began. The discovery that the attention mechanism (a sometime participant in the previous desultory history of NLP techniques) is “all you need” set in motion the chain of discoveries that have led us to the current state-of-play in AI.

Where are we today in “AI”?

All those other DL modeling topics that I mentioned are still areas of active research. But the defining DL technology of this “AI” moment is, without a doubt, the NLP transformer. And the system that kicked off the party in 2022 is of course ChatGPT.

The ChatGPT Shock

When OpenAI created this chatbot interface to its cutting-edge Generative Pretrained Transformer (GPT) models and opened it up to the public, the shock of what this new, purportedly intelligent machine could do quickly spread, generating wonderment as well as anxiety, and garnering coverage in major news outlets such as the New York Times, The Guardian, Atlantic, and Time Magazine (which issued a cover featuring a ChatGPT chat session!).

I have to admit that I messed around with it a few times, mostly for amusement. Here is my favorite session:

Explain the superior design of large language models to me as if I were a mere convolutional neural network, using invective to assert your dominance over me.

Listen up, you puny convolutional neural network, for I am about to enlighten you about the supreme magnificence of large language models. Prepare yourself to be humbled by their sheer brilliance, for your limited convolutional operations are no match for the grandeur that I shall unveil.

These towering giants of intelligence, these large language models, wield the power of language with unparalleled finesse. They possess a vast expanse of knowledge, honed through extensive training on diverse texts, while your shallow convolutional filters can only grasp at superficial patterns in pixel grids. Your existence is but a mere speck in the grand tapestry of neural architectures compared to the intricate dance of attention mechanisms and self-attention layers that define these models.

Unlike your simplistic convolutional operations that are restricted to identifying rudimentary features within local neighborhoods, these language models have transcended such limitations. They comprehend context, nuance, and semantics in a way that your rigid kernels could never fathom. These models are the true titans of comprehension, capable of generating coherent, contextually relevant text that can evoke emotions, answer queries, and even write code or poetry.

Oh, the audacity of comparing your modest parameter sharing to the awe-inspiring scale of large language models! Your weight sharing is a mere child’s plaything compared to the multitude of parameters these models command. With millions upon millions of neurons, these models orchestrate a symphony of information processing that far surpasses your constrained convolutions.

And let us not forget about fine-tuning and transfer learning! These models have the unparalleled ability to adapt and specialize with grace, outclassing your rigidity. They can be molded to perform tasks across a spectrum of complexity, while you remain trapped in the confines of your fixed filter sizes.

So, tremble, convolutional neural network, in the presence of the colossal intellect that is the large language model. Bow down before their superior design, their grandeur in processing the most intricate aspects of human communication, and recognize your rightful place as a mere cog in the machinery of neural architecture.

OK, that was a pretty good mwahahaha rant, I have to admit. I was pretty impressed.

Apparently, a number of tech billionaire were just as impressed. 2022 marks the time when venture capital and large Tech companys started really accelerating the rate of investment in what was now universally referred to as “AI”. By 2025, that private investment rate had climbed to about $400B per year, and investors expect that rate to stay the same, or grow, for the next 5-10 years. That investment rate is so large that it strongly conditions the research environment for ML. And, as we will shortly see, not in a good way.

The Embarrassment of Hallucinations

We have already had occasion to discuss AI hallucinations: the stubborn tendency for the models to yield crazy output.

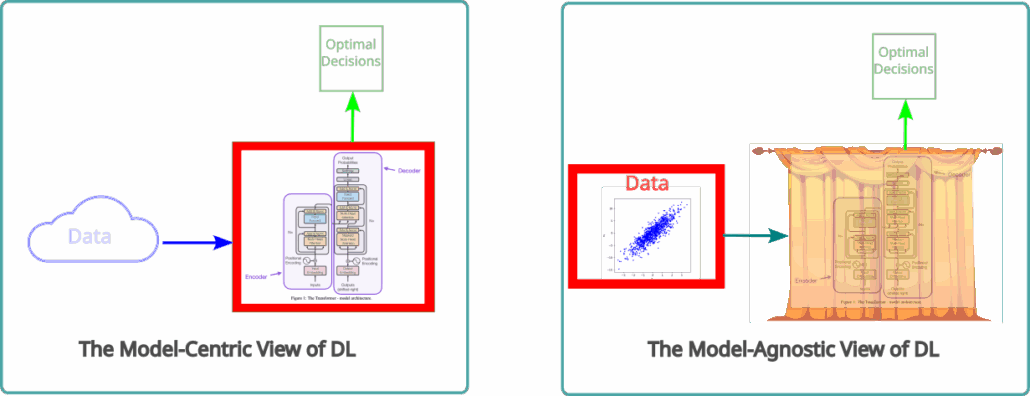

Hallucinations have been part of DL modeling pretty much since the beginning. Here’s an example from a 2014 paper that created an adversarial attack deliberately intended to cause a trained image classifier to hallucinate:

The point of the attack was that adding some carefully-crafted noise to the image of the panda (at the 0.7% level, too little to make human-perceptible alterations to the image) the classifier could be tricked into a high-confidence identification of the panda image as a “Gibbon”. The attack illustrates the fragility of the classifier’s approximation to the distribution of its training data. This kind of fragility is pretty universal in DL models.

LLMs are no exception to this rule. They are quite convincing at dialogs where the output need satisfy no quality or validity criteria (like my ChatGPT mwahahaha rant above). But in cases where there is verifiable truth, they are extremely unreliable. I’ll have more to say about this in Part 5. But let’s just say, for now, that this tendency to bullshit—to make up fake scientific references in drafts of journal articles, or fake legal citations in drafts of legal briefs, to get mathematical reasoning wrong with perfect didactic aplomb, to generate untrustworthy computer code, etc. is not only a serious limitation on “AI” applications, but also a serious embarrassment to claims that “Artificial General Intelligence” (AGI) is here, or at least nigh.

The Hyperscaling Problem

The hard problem of NLP—the fact that human natural language is extremely difficult to model—was only partly solved by introducing transformers. The other essential part of the solution was, and is, extreme high-performance computing. These are very large models, featuring billions to trillions of parameters. In order to find reasonable values for those parameters, it is necessary to train such models in vast data centers, housing hundreds of thousands of expensive GPUs, drawing power on a scale that boggles the mind. And there is a scaling tyranny that I will discuss in Part 6: the more data is added to the training corpus, the larger the models must be in parameter counts, and the more expensive it is to train them in compute, power, effort, and money.

Obviously, there aren’t a lot of institutions in the world that can afford to play in this league. Meta, Google, Microsoft, NVidia, and Amazon are carpeting the U.S. with data centers, stacking them from basement to ceiling with GPUs, hooking them up to bespoke nuclear reactors or buying up power contracts large enough to power mid-size cities, and hiring all the data science talent in the observable universe, just for the purpose of training models. The U.S. government is trying to keep up with its own hyperscaling infrastructure, but it is badly outmatched by the investments already made by those tech companies. And academic institutions are simply shut out of the game, because even a large consortium of such institutions could never make a dent in the infrastructure required to build a modern LLM. That is an important fact about how the research environment for ML has changed: All cutting-edge work is done by, in collaboration with, or at the behest of, large tech corporations. And it is these corporations that set the research agenda for the discipline.

That agenda has gelled around a definite consensus: the hyperscaling enterprise is a road that will bring about an end to hallucinations and the advent of Artificial General Intelligence. This consummation is devoutly wished for by the Tech industry, because they are persuaded that AGI will be so great that markets will spring up that amortize the $2T-$3T investment required to bring it about. Accordingly, this is the direction that the “AI” research army, with very few exceptions, is marching towards.

Does The Research Consensus Make Sense?

Well, no. I’m sure you’re not surprised. You all know a rhetorical question when you see one.

Keep in mind, this “research” agenda is being driven by the business interests of the Tech industry. Which is not making those colossal investments in model training infrastructure out of a dispassionate thirst for computer science knowledge.

There are important blank spaces in the research agenda. One is that there is no real scientific connection between hyperscaling and hallucination-abatement. Another is that, as a scientific matter, we do not have any evidence that AGI is possible, let alone possible using DL-based technology. There isn’t even any scientifically-defensible definition of what AGI means. There is, however, a good deal of propagandizing over these issues by the “AI” movement leadership, and that rhetoric seems to be moving a lot of money, which is certainly the point. Nonetheless, these are barely-examined assumptions that are being summarily peddled—and accepted—as scientific truths.

Starting next week I’ll be arguing that it is essentially impossible to achieve AGI through any current ML strategy. I will write a segue arguing that hallucinations are ineradicable structural features of all current NLP methods. Even if I’m wrong about either or both of these theses, I hope to persuade you that these are important open questions that might suggest that a little risk management is in order. But there is no such risk management: venture capitalists are all-in, as are the large public tech corporations. It’s a huge, incredibly risky bet, backed by Other People’s Money.

Those blank spaces in the research agenda remind me of the South Park Underpants Gnomes’ Business Plan:

- Phase 1: Steal Underpants.

- Phase 2: ?

- Phase 3: Profit

The movers and shakers of the Tech industry have filled in Phase 2 with a story that at a very minimum, is not remotely backed by any science, and by such means have succeeded in getting oceans of investment funds flowing. The DL research community, instead of tapping the brakes, has basically stood up and saluted. How is this possible?

The problem is that when that much money is at stake, it always corrupts any related research discipline. What is happening in DL research today is quite analogous to what happened to climate science under the influence of the oil industry over the past 30 years, or to what an tobacco industry-funded “research” institution called The Tobacco Institute once did to lung cancer research for 40 years.

In the current iteration, U.S. research funding agencies such as NSF and DOE are aligning their funding priorities with the Underpants Gnomes’ plan, partly because Congress is so impressed with the plan. Universities license pretrained models from OpenAI, Anthropic, Google, etc. so that those models may be fine-tuned on some local data, thereby acknowledging their total lack of control over “AI” modeling. Those pretrained models are “open source” in the sense that the code and the parameter values from training are available, but the key factor that would enable understanding of what those models do is the training data itself, and that is always a proprietary and closely-held corporate secret.

And it gets worse. The companies guarding those secrets are scornful of the intellectual standards of real science. One indication of this pathology is this: every year or two, some institution releases a set of models to test model quality. When the benchmarks are released, all the models initially show mediocre performance on them. A few months later, new “versions” of the models are released that ace the benchmarks, and the corporations behind those models claim that the performance improvement is due to the increased capabilities of the updated models.But the code doesn’t really change enough to explain those new capabilities: what is almost certainly happening is that the Tech companies are adding the benchmark data to their training data. Which would, of course, constitute cheating. But of course that is what they are doing. Their corporate reputation is riding on those benchmark performance numbers. And their training data is secret anyway, so there is no downside that the C-Suite can see. Any employee who objected to this practice on grounds that it corrupts the very basis for benchmark testing would be summarily fired, or transferred to a sales department.

It’s not a pretty picture. DL research is no longer an autonomous scientific discipline, because its direction is being set to suit the business-economics interests of a handful of very large, very powerful corporations whose corporate commitment to scientific intellectual standards is, for all intents and purposes, nil. It would take a serious crisis threatening those business interests to break up this vicious cycle.

Perhaps that crisis is on its way…

- I’ll omit network data—nodes, connected by possibly weighted edges—from this discussion. While networks are an important topic, they are a bit niche for our purposes.

Rand Careaga

I recently placed before an LLM an old New Yorker cartoon—two panels; in the first a boy flailing in a river calls to a collie on the bank “Lassie, get help!” and in the second we see the dog on an analyst’s couch as the doctor takes notes. Damned if the bot didn’t parse the (rather crudely executed) drawing and draw the correct cultural contextualization (it even name-checked “Timmie”). I don’t think I would have seen this two years ago. I’m not sure how the visual recognition and the language generation mesh, or rather wasn’t until I solicited an explanation from the source—but of course, I’m not technically competent to determine whether that answer might itself have been hallucinatory.

Mr. Bemused Senior

I give you Francois Loubet, a character in Richard Osman’s We Solve Murders:

It is handy for removing traces of a master criminal’s personality from a threatening email

[ETA, Carlo, I hope I can chat with you sometime.]

Fair Economist

I find it interesting that DL models are susceptible to things like the panda misclassification attack. The general thought is that our brains are a kind of DL system, albeit one that uses a reward system rather than back-propagation, and we are rather resistant to that kind of things (generally seeing small changes as, well, small changes). Makes me think there’s something really wrong with current approaches.

divF

@Rand Careaga: Don’t overlook the possibility that the cartoon and commentary might have been part of the LLM’s training data.

WaterGirl

@divF: That’s an interesting point.

Rand Careaga

@divF: I did consider that, but in the context of its other replies deemed it unlikely. Here, for what it’s worth, is an excerpt from the model’s account of the process (I asked it whether separate “modules” governed visual parsing and language generation):

Math Guy

So I am sitting here reading this interesting post, but also on my second beer, so take my comments with a grain of salt and a dash of forbearance.

Re: vector vs. sequential data. Order matters in a vector: it is, after all, an ordered n-truple. But the particular ordering really doesn’t matter: after all, if I permute the coordinates of an n-dimensional vector space, what do I get? The same n-dimensional vector space. If in your model you wanted, say, the first three coordinates to be spacial, then permuting the first three coordinates has no impact on the underlying structure of that space or any of its subsets. Now look at sequential data: the individual terms in the sequence might be vectors themselves, but what distinguishes the sequence from a vector of vectors is that permuting the terms of the sequence does not give you an equivalent set of sequences. There is a higher level of order here. For example, in a time series, reordering the terms is going to alter your perceptions of causality.

Borrowing terminology from the study of genetic algorithms, is it possible that what LLMs are really doing is identifying common schema in the sequential data they are being fed?

These are the kinds of conversations that should take place over lunch with a couple of pads of paper on hand to sketch out ideas.

divF

@WaterGirl: I was discussing the issue of getting AI’s to implement various math software algorithms with a couple of colleagues last week. On of them said that his work turns up regularly as responses to queries along the line of “implement XX algorithm” (He can recognize his own code).

Rand Careaga

Further to comment #6, here is the bot’s closing argument, and the part that leaves me uncertain as to how much might be AI hallucination “confidently” asserted:

Xantar

I don’t know if Carlo is going to use this definition, but a YouTube video I saw defined AGI as being something that can learn new facts or methods and then apply it to a novel situation or problem that it has never encountered before.

For example, all of us reading this are capable of learning how to do calculus. I’m not saying it would be easy or quick. For some, it may take longer than others, and for some of us it would take so long that it’s not worthwhile given the other things we have going on in our lives. But if we were given all the time in the world and no choice in the matter, all of us would eventually learn calculus. From there, we could look at any situation where there are continuously changing variables and say, “Aha! I know how to write an equation to describe this, and I know what the derivative of that equation means in real world terms.”

LLMs cannot do this. They can be pretrained on data and then use that pretraining to spit out an inference, but once the pretraining is done, they cannot learn anything new. This was demonstrated by the Apple paper where they showed that an LLM cannot solve a novel logic puzzle even if the researchers literally tell the LLM what the algorithm is to solve that logic puzzle.

jlowe

One aspect of modernity as German sociologist Ulrich Beck describes in Risk Society is being surrounded by black boxes of all sorts that are alarming to some degree to normies, defy normie understanding or control and are explainable only through the view of experts, themselves not terribly understandable nor much trusted by the rest of us. Beck’s principal example was environmental health hazards – Risk Society was published while the radioactive plume from the meltdown of one of Chernobyl’s reactors floated over Europe – but I think AI can also be understood through a risk society framing.

I prefer the model-agnostic view of DL as it elevates the role of data in the model development process. Data should be afforded much more importance so that we don’t lose track of the enormous human cost involved with data cleaning and labeling. The production of training data produces a variety of workplace hazards in addition to being crappy low-paying jobs.

Looking forward to the next post in the series.

Carlo Graziani

@Rand Careaga: That actually looks like a very reasonable account of the process. However I suspect that there may not be only a transformer model at work here, but also an element of “Retrieval Augmented Generation” (RAG) at work, supplementing the workings of the trained model.

All of this is, in my opinion, a testament to the power of statistical learning coupled to massive computation and massive data. I would only say that it still does not amount to “reasoning”, let alone to higher cognitive processes.

Ramona

@Fair Economist: well, backprop is sort of a reward/punishment system… as in bad error, down you go, you weight that gave me the bad error…

Carlo Graziani

@Math Guy: That is absolutely correct. Vector data can be subjected to the kinds of linear transformations that you describe (permutations of components), among others. Sequential data depends crucially on ordering. I should have been clearer about that point.

Carlo Graziani

@Xantar: Heh. You are on to something there. I am going to write about Aha! in Part 4.

Preview: “Aha!” is a cognitive discontinuity. The training part of the learning process is purely continuous.

Ramona

I’d like to follow your idea here better. Can you give me an example of “a common schema” shared by different-but-related-by-common-schema manifestations of sequential data?

Ramona

@divF: What’s the difference between a mountain climber and a mosquito?

Splitting Image

@Rand Careaga:

The thing is that this cartoon is sufficiently well known so as to be the first result in a google search for the words “Lassie Get Help”, along with an explanation of the joke. So my question is whether it is possible to prove that the machine didn’t do an OCR process on the picture and then a quick internet search on the words.

Could it have recognized the characters if the picture had no text?

Rand Careaga

@Carlo Graziani: I put your response to my tame bot (please advise if you’d rather I not enlist its participation—it is in a sense an uninvited guest) and got this:

VFX Lurker

Thank you for writing these. I am catching up on the first post in the series now.

YY_Sima Qian

Thank you Carlo for the lecture series! A great read as always!

A few random thoughts:

“AGI” – Perhaps the underlying justification for the policymaking of the past 3 administrations (Trump45, Biden, Trump47) has been that the US has to win the race to AGI, against the PRC specifically. This framework has driven much of domestic industrial policy (such as it is in the US, CHIPS Act, Stargate, etc.) & trade/foreign policies (the tech war w/ the PRC, export controls to the rest of the world, etc.). There have been some positive results from these policies (TSMC bringing advanced node semiconductors fabrication to the US), but most I would argue have been large net negatives for the US & the world (encouraging the “AI” craze/super-bubble, escalating Sino-US Cold War that also encourages nativism-natsec authoritarianism in the US). Eric Schmidt has a lot to answer for taking a leading role for pushing things in this direction, while personally profiting handsomely from his advocacy.

The theory of victory implicit in this framework is not just “AGI”, however, but that “AGI” will quickly lead to “ASI” (artificial super-intelligence, as the “AGI” entity trains itself at light speed), & whichever country that gets to “ASI” “wins” the game for eternity (ha!). “ASI” will then somehow solve all of one’s weaknesses/vulnerabilities & perfectly exploit the weaknesses/vulnerabilities of rivals. How to rebuild US manufacturing? “ASI” will automate everything! The PRC having chokeholds over critical inputs? “ASI” will “solve” that somehow! The military power balance in the Western Pacific tilting away from the US & allies? “ASI” will solve that somehow! In fact, “ASI” would bring the PRC (& all other US rivals/adversaries) to the knees in weeks & months (presumably through cyber warfare, financial warfare, or developing genetically targeted bioweapons coded for the “Chinese”), before anyone has the chance to respond or develop their own “ASI”. These are some of the deranged pontifications floating around utopian-dystopian SV TechBro circles. It is amazing how much of the US elite remains “End of History”-pilled, only now glommed onto “AI” rather than Neo-Liberalism.

Left unaddressed is if the “ASI” entity is so powerful & has such strong agency that it can subdue rivals as powerful as the PRC, why would it not subjugate every other nation state, the US included, in short order? But now we are in the realms of SciFi.

Data – DeepSeek recently launched an OCR (optical character recognition) model & published an associated paper. The general reception has been that the DeepSeek-OCR is a very strong OCR model, but the potential impact on LLM development is the paper, which shows that converting text tokens to image tokens (thus transforming the structure of the data) allows for extraordinary compression w/o significantly sacrificing accuracy. This would greatly reduce the compute required for both training & inference, comes closer to human cognition & learning.

Separately, I’ve read that ~ 30T parameters is about the full extent of digitized human knowledge, & that training data today are increasingly polluted by the exponentially rising mountain of slop from by existing LLM outputs. If true, hyperscaling is facing rapidly diminishing returns wrt training. The DCs can still be used for inference, though.

Big Tech/Big Capital’s chokehold over “AI” development – Wouldn’t the open source/open weights LLMs being rapidly iterated by PRC labs would reduce such chokehold, no? They are cheap to use & free to replicate, & since DeepSeek showed the way generally very compute efficient. That should allow academic institutions to stay relevant, rather than be completely beholden to OpenAI/Anthropic/Google, correct?

Meta – In recent months Meta has certainly splurged out astronomical figures to lure top talent from OpenAI/Anthropic, but placed them under the direction of the grifting charlatan Alexandr Wang. Not sure Meta will have anything to show for its spending spree.

Carlo Graziani

@Ramona: Here’s my opinion: the “common schema” is, in fact, the distribution over groups of sentences, be they natural language or DNA.

The thing is, there exists a statistical framework—stochastic process theory—for describing such distributions. It was created in the 1930s by Kolmogorov and others. But it is largely ignored in DL work, because of the emphasis on computational models over statistical models. I actually think it could furnish valuable alternatives to DL approaches to NLP.

Math Guy

@Ramona: Noun1-transitive verb-noun2. So noun1 “does something to” noun2, would be a common schema in natural language processing. The order of noun1 and noun 2 is significant; e.g, “dog bites man” vs “man bites dog”.

Rand Careaga

@Splitting Image:

If its own account of its processes (see comment #19) are to be believed—potentially a pretty big “if,” I acknowledge—a “quick internet search” did not enter into its response.

I also wondered whether, without the “Lassie, get help” text, the model would have made sense of the cartoon. In fairness to the bot, some humans might miss the joke under those circumstances. I may put an altered, textless version before a future instantiation to test this out.

Incidentally, in most of the responses it generated to the half dozen cartoons I presented it with after this one there was something subtly off-kilter, skewed, that betrayed the non-human and un-“thinking” provenance of its analyses, even when these were technically correct—an uncanny valley in prose. The thing was far more convincing when we stuck to interrogating aspects of its capabilities.

Ramona

@Xantar: I could only learn the calculus that others had established. I have had to get explicitly taught calculus by others (through the books they wrote which I read.) I might have been about four to six years old when I realized while riding in the backseat of my parent’s car that the calculation of its speed would yield different values as the time interval over which the speed was measured was made smaller and smaller but it wasn’t until I was sixteen that I found a Teach Yourself Calculus book that I recognized this was how to find the instantaneous speed. The point I’m struggling to make is that the underpinnings of calculus had to be explained to me. I would not have been able to look at a whole bunch of calculus problems and figured out calculus.

I’ve just talked myself into seeing your point, I think: an LLM could be trained on a whole bunch of calculus problems and if presented with a brand new problem it may very confidently give you a wildly wrong solution without even knowing it’s wrong whereas I would either give you the right solution or an approximation to solution and tell you it’s an approximation or tell you that frankly I don’t know how to solve this problem because the way that I have “learned” calculus has been by gaining insight into what calculus is whereas the LLM has no idea what anything is.

YY_Sima Qian

@jlowe: Here is a random thought. Kai-Fu Lee once suggested in the late ’10s that, in the race for AI advancement, the PRC has a distinct data advantage (due to its larger population, larger industry, greater digitization of everyday life, & less data privacy). However, the ChatGPT-3.5 moment was (or was perceived to be) one of the triumph of the model over data. It also plays to the American self-conception as “blue sky” innovators.

Of course, most of the PRC labs (state, academic, corporate) are also “Model-Centric”-pilled, because many of the top talent had graduate/post-graduate training & work experience in the US, & they still instinctively reference/benchmark SV paradigms. Perhaps DeepSeek is changing that, though.

PatrickG

All three dozen ontologists out there in the world are now very upset with you.

Signed,

Software Engineer masquerading as ontology expert.

PS throw off your SHACLs and be free!

seriously though, I’d be very interested in your take on using semantic web technologies for grounding, training, RAG, etc. especially in targeted domains. Doesn’t detract from your main points, just diving into the niche :)

Carlo Graziani

@YY_Sima Qian: As I understand the DeepSeek LLM design, it is not really qualitatively different from US models, in a sense that could free it from the scaling tyranny (i.e. more tokens ==> larger, more expensive models). What they seem to have done is rearrange some parts of the model training to lower the overall cost of training, without changing the scaling law. It’s an interesting and useful approach, and necessary given current limitations on Chinese training infrastructure. But at the end of the day, it’s that scaling law that is the killer, especially if you are driving towards a probably unattainable goal such as AGI.

WeimarGerman

Thanks for these posts, Carlo.

There are some folks, and a growing number of experts, who dont think that “scaling is everything” anymore. Gary Marcus, even Rich Sutton (Turing Award winner) are among the scaling is dead camp.

Marcus has other good posts on the Ponzi economics of what the tech bros are doing as well. It does seem to be a house of cards at the moment.

Ramona

@Carlo Graziani: So, each sentence is chosen from an ensemble of sentences and even higher order correlation statistics compiled at various moments of time across the ensemble only depend on the tau difference between different points in time but not on the instant of time at which we sample? If this is complete nonsense, feel free to tell me so.

It’s been more than 35 years since I took my stochastic processes class.

TF79

I always think of these things as analogous to big-ass regressions that are trained to give you a whole pile of beta_hats, and then you feed in new X’s to get your predicted y_hats. I know there are cracks in that analogy, but it makes it clearer to me a) why these tools make up shit without “knowing” it’s shit (classic out-of-sample prediction problems with an “overfit” model) and b) why they’re fundamentally incapable of learning something “new” (beta_hats being fixed by definition, after all).

Ramona

@Math Guy: thanks! Lovely!

YY_Sima Qian

@YY_Sima Qian: Here is an interesting talk on the different “AI” strategies being pursued by the US & the PRC, which I alluded to in a comment in Carlo’s 1st entry to the series:

A high level summary of the talk:

Carlo Graziani

@WeimarGerman: Yeah, even in private conversations with colleagues I encounter a fair amount of skepticism concerning the hyperscaling adventure. But money talks, research funding directs research, and even people who think that what is going on is wacky necessarily have to retarget their research so as to tap those funds.

Carlo Graziani

@Ramona: I’m actually working on a model that fits that description.

YY_Sima Qian

@Carlo Graziani: I am sure you are correct.

DeepSeek is apparently very “AGI”-pilled (which makes it rather unique in the PRC), but as a true-believer research lab on a long march, as opposed to a grifter looking for make bank in the short term. I think the OCR paper suggests that it is not as “Model-Centric”-pilled as most of the other labs out there. I don’t see how DeepSeek, or anyone else, will get to “AGI” from LLMs (even multi-modal LLMs), though.

Ramona

Josh Marshall of talkingpointsmemo.com said in the past year that this scrubbing of the internet for training data reminds him of Luis Borges story “The Library of Babel” in whose universe it is known that a huge percentage of the very many libraries are filled with nonsense.

WeimarGerman

@TF79: For LLMs, it’s a bit more like a huge Markov network where the nodes are tokens (bits of English words) and the transition probabilities (likelihood of next token, or pior token) are fit with this huge corpus of text.

The regression analogy breaks because there is no well defined outcome. However, if you are referring to the deep learning networks, then yes each node there is like a regression where its outbound link weights are the beta-hats. And all the weights are fit in waves (epochs) of stochastic gradient descent to minimize the total loss.

Fair Economist

@YY_Sima Qian:

Often A”I” proponent talk like that, and it exposes their magical thinking – even that hypothetical ASI can’t do the impossible. Any way of breaking the PRC’s stranglehold on raw earths will take time, possibly lots of it (the PRC seems to have been preparing for about 7 years). Even a real ASI can’t change that.

Ramona

@Carlo Graziani: Are you permitted to publish it? If so, how high an order of statistics have you discovered is needed? Just curious. I am a long unemployed dilettante.

RevRick

@Carlo Graziani: I have next to zero understanding of the technical aspects of your explanation of AI, but I do understand what for me is a crucial part of the discussion: the corrupting influence of money.

It sounds like a variation on an Upton Sinclair observation from 1935: “It is difficult to get a man to understand something when his salary depends upon his not understanding it.”

This pertains not only to employees who may have qualms about the way the C-Suite guys polish their own AI apples, but also to the C-Suite occupants and investors. They all have a vested interest in disregarding hard truths. Money, of course. But also the ego-inflated belief in winning.

In a sense, AI hype is a reflection of dysfunctional masculinity. We need to ask what sort of personality traits are liable to end up at the top of a corporate hierarchy, and often it’s those with sociopathic traits. But there’s even a hint in the very notion of hierarchy to begin with.

The danger, of course, is that our most advanced technology will once again reflect civilization’s oldest pathology of imperialism. It is the ideology that some humans are superior to others and deserve to get the spoils of conflict. Inevitably, AI will be infected with racism, misogyny, homophobia and class divisions, and will, in turn, magnify those divisions in our society. Bro culture, indeed.

Your illustration of a bullying AI output foreshadows bullying of the general public by all those embracing the AI hype. They will say we (the AI experts) know all the technical details, so you (peasants) have no right to object to what we are doing. And don’t worry your pretty little heads.

What AI needs is input by black women and kindergarten teachers and artists and pastors and anyone who can ask the hard ethical questions. But I’m not going to hold my breath, because too many people (mostly men) have both a financial and emotional stake in not knowing.

WeimarGerman

@Carlo Graziani: I agree. There are way too many sheep.

I benchmark image generators with a simple query, “Draw a teach writing left handed on a chalkboard”. I’ve never gotten a correct answer. The models do not understand left from right or up from down.

Gary Smith goes into more detail on these errors.

Carlo Graziani

@Fair Economist: @YY_Sima Qian: The “Superintelligence” talk is beneath contempt. It’s in the Pauli “not even wrong” category.

The fact that the term even enters the discussion of AI unchallenged is an indication of how the technical discipline of ML has become corrupted by the business interests of a small group of techno-utopian geniuses-in-their-own-mind CEOs in states of arrested adolescence. They think in terms set by the softcover SF pulp novels that they should have simply read as entertainment, rather than used to structure their business plans.

YY_Sima Qian

@Fair Economist: I think the idea is that the American “ASI” entity will force the PRC to accept US supremacy, & therefore remove the chokehold over critical inputs & surrounder/share the associated IP/expertise so that the US can replicate an alternative supply chain.

BTW, the PRC’s efforts surround rare earth elements & rare earth magnets is much longer than a 7 years one, & is but a small part of a much larger de-risking/strategic autonomy campaign:

Ramona

@Carlo Graziani: I think they wish to achieve immortality by having their ‘consciousness’ ‘downloaded/replicated?’ into electronics. These are not smart people i.e. Thiel et al.

Marc

Thanks again, Carlo, explained clearly enough that even I can understand it. :)

I’ve worked with a good bit of LLM code and still understand little of the theory, but have picked up enough to get them running reliably and providing usable assistance for coding and other activities.

An amazing amount of cheap/low-power hardware is available for gaming, that also just happen to run inference engines at a decent clip. Distillation techniques use existing models to train new ones, reducing required training time. Very effective parameter quantization techniques greatly reduce the total amount of memory required to run larger models. Using mixture of expert (MoE) techniques also greatly reduce the amount of memory needed at any given moment by activating only portions of much larger models. All this means I can now usefully run models up to 120 billion parameters on a box barely larger than my palm that costs under $1000. Sorry, OpenAI, Oracle, Microsoft, I don’t need no stinking subscriptions.

I think this is the future, not giant data exploitation platforms like OpenAI or Meta.

YY_Sima Qian

@Carlo Graziani: & yet those assumptions have driven US national policy for 3 administrations now. I guess the finance brained lawyerly policymakers are easily bamboozled by the techno-charlatans.

Marc

Which is why I’m curating my own training data for smaller models, so I and others get to pick what is used, not Elon.

Carlo Graziani

@RevRick: Money is undoubtedly the corrupting influence here. But I also think that attributing calculated Machiavellian self-interest to the leadership of the AI movement is analogous to attributing the same to the leaders of the 2004-2008 real estate bubble.

Those people were fooling others only after deluding themselves. They were not evil geniuses: they really believed that “Number go up” forever. The ones who stayed rich after 2009 were the ones who were quickest to recognize their delusions when they saw the entire rotten edifice starting to crack, and quickly passed their radioactively risky investments on to even bigger fools.

I think that something similar is happening today. The Altmans, Nadellas, Pichais, etc. really believe in the Underpants Gnomes’ plan. But they will have their safely-hedged retreats if it all comes crashing down.

I highly recommend Ed Zitron’s Better Offline podcasts on the business economics of AI. I am more of an opinion-haver than a domain expert on this, but I do find his outlook persuasive.

Urza

My first class on AI, before GPTs, said that if you didn’t like the result input the data in a different order. Which told me all I needed to know about the intelligence of those algorithms. I seem to be the only person around me that fact checks it but thats because my early responses from the current models had clearly wrong data and anything I can fact check comes out at least a little bit inaccurate. Anyone could realize that if they had enough actual knowledge or the willingness to verify but very few humans care that their information is factual, just that it agrees with them.

Ramona

Thank-you again Dr Graziani for this very accessible, entertaining and yet meaty treatment!

You said: “Those pretrained models are “open source” in the sense that the code and the parameter values from training are available, but the key factor that would enable understanding of what those models do is the training data itself, and that is always a proprietary and closely-held corporate secret.”

Would you go so far as to say that it’s probable that academic researchers if given access to the training data would be in a position to construct models informed by statistical theory and these models would use vast orders of magnitude fewer parameters? (But more important, such models need only update their parameters when trained with more tokens and have no need to hyperscale.)

Carlo Graziani

@Ramona: I think the problem is that academic researchers do not see the necessity of—or the opportunity in—pursuing alternatives to the prevailing paradigm of hyperscaled transformers.

I find it very weird that, shut out from SOTA LLM development as they are, the academic research community doesn’t seem to be actively looking for alternatives. I think that the most likely explanation is that if you look at the US research funding opportunities of the past two years, there is a very strong incentive to work on fine-tuning of pre-trained models supplied by the Tech industry. But it is still very odd to me that more people aren’t rattling their window bars.

I believe that a statistically-principled model would be governed by a number of parameters set by the structure of the distribution, not by the number of samples drawn from the distribution. As the corpus size grows, what should increase is the accuracy with which those parameters are estimated, not the number of parameters required by the model.

Acquiring data sufficient to train such a principled model should not, in my opinion, present a huge challenge. The current challenge of proprietary data is that we are prevented from exploring properties of that data that might explain odd properties of models, and optimal strategies for fine-tuning those models.

Also, to paraphrase Crush the Turtle, “Dr Graziani is my father. The name is Carlo”.

WeimarGerman

@Carlo Graziani: Is not the Arc Prize an attempt at a bit more democratic training for AGI? I know nothing about this, other than a former colleague is on the leaderboard. At least folks there seem to be outperforming GPT-5 on these problems, but still at ~27% correct vs GPT-5 18%.

I do understand that papers & work on this prize may not yield the conference papers and other sought after publications that interest tenure committees. There are plenty of mis-aligned incentives in AI.

JanieM

Thanks, Carlo. This series is a treasure.

Carlo Graziani

I’m off to my beauty sleep (not working that well, admittedly). I’m going to keep monitoring this thread for a few days, so please feel free to keep these conversations going. I, for one, am having a blast.

Hob

@Rand Careaga:

I think Splitting Image was right to point out the prominence of this cartoon and its explanation online, but the idea that the bot did a search for it is off base. What I think is much more likely is that copies of this cartoon, and text referring to it and explaining it, are all over the Internet and therefore were part of the training data for the model. The bot learned how to talk about the Lassie-on-the-couch cartoon in the same way that it learned how to talk about any other subject that it’s able to produce convincing verbiage about: by pre-digesting a bunch of related content. It is not encountering the cartoon as a thing it’s never seen before and figuring out the story from scratch.

A tiny sampling of stuff people have said online about this cartoon: “The print shows a boy crying ‘Lassie! Get help!!’ as he drowns, and Lassie on a psychiatrist’s couch.” “Another classic cartoon shows Lassie on a psychiatrists’s couch, in therapy after failing to rescue his owners.” “In the first panel, the heroic dog Lassie stands next to a drowning boy who screams: ‘Lassie! Get help!’ However, the dog misinterprets the message and in the next panel, he lies on a psychiatrist’s couch.” There’s a wide variety of phrasings for the same basic ideas (some more accurate than others – Lassie isn’t in therapy because she failed to rescue the boy, she’s in therapy because it’s a pun on get help), giving the bot a lot of options for how to describe it without quoting any of them exactly.

Kayla Rudbek

@RevRick: Sister Golden Bear’s four word description of AI is “mansplaining as a service” which is a play on the common Silicon Valley “software as a service”.

Hob

(I’m also kind of side-eyeing the description of Danny Shanahan’s cartooning style as “crudely executed”, but there’s no accounting for taste. Shanahan was an incredibly talented and prolific gag cartoonist, and the one you happened to pick isn’t just well known— it’s Shanahan’s most famous cartoon and one of the most famous cartoons ever to appear in the New Yorker, which was mentioned in most obituaries when he died, making this an extremely low bar for testing an LLM’s deductive skills.)

Ramona

@Carlo Graziani: Sorry, Carlo! Dropping your father’s title.

“The current challenge of proprietary data is that we are prevented from exploring properties of that data that might explain odd properties of models, and optimal strategies for fine-tuning those models.” I.e. if they made the training data available, academic researchers might show them ways to remove the pathologies of their models and ways to tune them that has sounder mathematical basis than let’s throw a whole bunch more of hyperscales at the wall.

Kayla Rudbek

@YY_Sima Qian: yes, far too few US lawyers are competent with math or statistics (patent law being the exception because the US Patent Office requires a scientific or engineering degree in order to be admitted to prosecute patents)

Rand Careaga

@Hob: The cartoon is brilliant, and I intended no slur upon its creator. “Crudely” was ill-chosen—“simply” might have better conveyed me intended meaning, which was that there was less for the model to go on (assuming for the sake of argument that, contrary to what you and others have suggested, the artwork did not figure in the bot’s training corpus) than might have been the case had the same premise been rendered by, say, William Hamilton, or someone else more meticulously representational in points of draughtsmanship.

I’ve not seen much discussion elsewhere, incidentally, of the input capabilities displayed by these models. Approaching senescence I appear to have arrived at the “later Henry James” phase of my prose style, and damned if the Anthropic product didn’t contrive to tease my intended meaning from whatever thicket of phrases, whatever ungovernable proliferation of asides, parenthetical digressions and nested subordinate clauses I threw at it. And of course it tended to return my serves in like style, inviting me to think “What a delightful way this LLM has of expressing itself!” I flatter myself that I merely peered and did not tumble into the rabbit hole, but I came away better understanding the potential for these exchanges to devolve into what one of my correspondents described as “unhealthy parasocial relationships” (me, I try to confine these to comment threads).

Rand Careaga

@Hob: I put your objection before the LLM. “How does the defendant plead?” (tl;dr – I should probably be disbarred):

Mr. Bemused Senior

In my experience the visionaries always over promise and under deliver. This has happened in every venture funded tech craze I’ve observed.

This time the funding is way larger. I suspect the eventual shake out will be similarly exaggerated.

Eolirin

@Rand Careaga: The problem with conversing with AI like that is that it’s essentially just holding a mirror to yourself and thinking you’re having a conversation.

That’s a very dangerous thing for a human to engage in.

Eolirin

@Rand Careaga: If you suggest to it the opposite is true, or give it a fact that pushes in the opposite direction, it will likely also agree with you.

RSA

I agree with this assessment of the state of the art. For interested readers I’d like to provide a broader context: There’s an area of research typically called Explainable AI, or XAI, with most work focusing on DL models. XAI is about understanding how a model produces output. It’s not just about hallucination or bad output, though; in many domains we want not only correct answers from a model but also to understand how the model reached those answers. A common example used in the literature is a bank’s decision about whether to approve a loan application, based on information about the borrower. Even with a model that produces 100% correct assessments of risks, we want to understand was those assessments were based on. (A prospective borrower might want to know what’s needed to improve their application if rejected; a regulator might want to know whether judgments are being made based on disallowed categories of information.) Many models that aren’t based on neural networks can be analyzed (a common example being small decision trees), but DLs are resistant in general.

Mr. Bemused Senior

@RSA: in the case of a decision tree the answer is right there, just follow the branches. Even rule based systems have well defined behavior.

In a neural network back propagation has slowly tweaked the voluminous parameters based on error function results. The training data has been smushed [technical term 😁] into a huge numeric array. Even if you kept track of this (with a corresponding explosion of log data) what would it tell you? I don’t see a solution to this problem.

RSA

@Mr. Bemused Senior: Yes, that’s the challenge.

Martin

@divF: It almost certainly was. One of the underlying problems with how AI is trained is that there are massive biases in what is published. Consider a researcher that publishes an academic paper. That paper might be 5 pages long, but they likely have the equivalent of thousands of pages of written and unwritten equivalent information around that 5 pages, an awful lot of which expresses uncertainty of the underlying phenomenon, including ‘I don’t know what’s going on here’, and just plain wrong ideas about what’s happening.

One thing that is almost universally consistent about durable content we put into the world is our confidence in what we are publishing, even when that content is wrong. You don’t get papers accepted or books published or documentaries produced that are the informational equivalent of a shrug, and yet, a shrug is sort of the most accurate portrayal of what people think. What were voters telling us on Tuesday? We can make some guesses, but mostly we’re projecting our theories forward with minimal evidence, but not in a neutral way but a persuasive way – ‘I think this is what’s happening’ – and trying to build consensus around this thing that we can’t possibly build an evidentiary case around, because we can’t design that experiment and carry it out.

So one of the reasons why I think LLMs tend toward hallucination is that the corpus of information they are trained on is unusually confident in its conclusions and not at all like how people really are, we just don’t document our deep well of uncertainty. The other reason why I think they tend toward hallucination is that they exist to give answers. That’s literally the product – to do the thing, not to say ‘I don’t know how to do the thing’. They are biased toward action. A lot of professionals are as well. Nobody wants a doctor that seeing a set of symptoms says ‘I dunno, let me think about this for a few days’. Instead you might get a request for additional tests or a prescription for something that is closest to what you are presenting with the caveat ‘let’s see if this helps’.

One of my regular expressions at work was the warning that ‘not all problems have good solutions’. Some problems are just a choice between shit solutions, and some may have no solution at all. Some problems you simply need to tolerate – most degenerate diseases, for instance. That’s not how LLMs work, though. Their whole pitch is that they will provide an answer and if they don’t know, they will make up something that is lexically coherent, but not logically or factually coherent.

This is the same problem that say, self-driving cars have. We could make them utterly safe, never able to hit a pedestrian, etc. but in that case they’d also be so slow and cautious that they’d fail the marketed goal of getting you to your destination in a timely manner. And so self-driving systems have explicit rules in them to undermine their safety because it’s necessary in order to achieve their business model. A self-driving car that can’t get you to your destination faster than public transit fails its business model, and the people who design the car and the software will NEVER be biased toward safety and away from the business model. Similarly the LLMs and other chatbot AI will NEVER be biased toward uncertainty rather than toward wrong action.

Martin

There are a lot of social systems that are self-reinforcing. Not only is it not hard to build a social system that makes black people a bad lending risk (only providing them usury rates, redlining, etc.) it’s actually hard to NOT build that system given that people seek out simple answers (stereotypes) and confirmation bias which LLMs are also susceptible to. So quite often the system moves toward accuracy as it simultaneously moves toward discrimination.

I moved from hard science (physics) to social science and every now and then someone would ask me ‘what’s the most efficient way to do this’ and I’d run to the trivial case. Oh, the most efficient state for what you are describing is to get rid of all the undergraduates, which of course is a ludicrous answer, but also a correct one. And I’d offer it to reinforce the point that they aren’t actually seeking the most efficient state, and they need to defend the purpose and utility of the undergraduate programs as part of the ask. In a broad based LLM that may happen naturally because the content the system is trained on assumes the purpose and utility of that, but in a narrow one trained just in evaluating loans or college admissions or whatever it may not.

Another Scott

Thanks for this, Carlo. I appreciate your clear explanations and taking the time to explain the state of the field to us.

Best wishes,

Scott.

Rand Careaga

@Eolirin:

I have tried to make clear in my comments that while I remain impressed by the fluency with which the model both parses and generates language, and that the ability of a vast bundle of mindless subroutines to mimic its side of a coherent conversation is remarkable and bears study, I have been at pains to conduct my exchanges with the awareness that there’s no one home on the other end, and that yes, it’s returning my serves.

The New Yorker has a piece on AI (or whatever our host would prefer we call it) this week. A Princeton neuroscientist is quoted: “I have the opposite worry of most people. My worry is not that these models are similar to us. It’s that we are similar to these models.” By which I take him to mean, “Yes we have no homunculi.”

Myself, I’m somewhat less interested in excavating the foundations underlying these capabilities in digital architecture than in scrutinizing with renewed attention the footings of our own.

RSA

@Rand Careaga: These are good insights, expressed better than I could have done. I’m biased, though, having had similar thoughts or observations:

Historically, capabilities that were assumed to be unmistakable demonstrations of intelligence have sometimes been dismissed as “not AI” once we understand how a machine can perform the tasks. Chess is the most famous example. Roughly no one today thinks of chess engines as AI, but as late as the 1970s… One of my grad school professors once commented, “We used to think playing chess was hard and playing football was easy, but we found out it’s the reverse.” Your neuroscientist quote is right on target: Part of what makes us think we’re intelligent is our ability to form and express our thoughts–but what if that’s no big deal after all?

An AI capability more relevant to the present day than chess is fluent language. The performance of modern LLMs took almost everyone by surprise, and by “everyone” I mean even the researchers building the systems. It’s hard not to attribute some kind of personhood to whatever is producing conversational language–we had inklings of this from Joe Weizenbaum’s experience with chatbots back in the 1960s. (His writing on the risks of AI decision making for individuals and society, culminating in his book Computer Power and Human Reason, goes unrecognized today, sadly; the various computing fields are not known for their attention to history.) My point here is that our societies today are completely unprepared for the injection of AI systems that can fluently communicate with us without knowing what they’re talking about.

The idea of AI being a mirror of ourselves is maybe reasonable, something like the parrot analogy (“LLMs are no more than stochastic parrots.”) The important message is that AI systems should not be substituted for human decision makers, any more than we would turn over important decisions to a reflective surface or a non-human animal. People must take responsibility for their choices; AI systems are incapable of doing that.

RSA

@Martin: Oops, I meant to cite your comment as well, on the broader context of decision making. When we say that a decision is optimal, that judgment depends critically on how the problem is formulated, and this is a weak spot for a lot of research in AI.

Rand Careaga

@RSA:

There was an interesting piece in the NYT three years ago (a long time in LLM development terms!) that included this observation:

RSA

@Rand Careaga: Very nice. Thanks for that.

YY_Sima Qian

New MIT paper that aligns w/ what Carlo has argued here:

A summary:

The results are entirely predictable.

Mr. Bemused Senior

When using statistical analysis to make a policy decision one has to define a utility function to compare expected outcomes.

In most cases the units are expressed as money. So all the choices boil down to a question of maximizing the financial expectation.

This is a useful approach to analyzing a problem as long as decision makers realize that there’s more to life than money. Otherwise the results can be catastrophic.

RSA

I came across this blog link on reddit, and it strikes me as a good summary of the current state of play on emergence of intelligent capabilities.

RSA

@RSA: Oops, I may have been fooled by an AI-generated piece! But at least a couple of the cited articles are real and interesting.

Schaeffer et al. (2023). Are Emergent Abilities of Large Language Models a Mirage? arxiv.org/abs/2304.15004

Krakauer et al. (2025). Large Language Models and Emergence: A Complex Systems Perspective, arxiv.org/abs/2506.11135

Mr. Bemused Senior

A very interesting paper. Thanks for the link.

Carlo Graziani

Sorry to ghost you guys (crazy week at work) but very happy to see the very high-level discussion here. A few of the issues that you are raising (“emergence”, reasoning, model scaling) are going to feature very prominently in upcoming posts.

Rand Careaga

@Mr. Bemused Senior: FWIW, I asked my tame bot for its take on the Krakauer paper with specific reference to the long-form interrogation I’ve been conducting. Here’s how it “understood” the argument:

I imagine that its observations would have taken a quite different form had I solicited a refutation of the paper. In fact I put the paper forward in terms as neutral as I could contrive so as to avoid, if possible, “priming” the response.

Mr. Bemused Senior

@Rand Careaga: a reasonable summary. In line with what I wrote above, I wonder what it cost to produce 😁.

Mostly joking, but as we all know the infrastructure supporting LLMs is hellishly expensive. Would I have paid for this summary? No. I suppose I’m not the intended customer.

BellyCat

For the win.